Antoni-Joan Solergibert

tj.solergibert@gmail.com

Biography

Hi 👋🏼,

I’m Toni, an MSc student in High Performance Computing deeply interested in accelerating AI applications. I consider myself a high-energy individual ⚡️ who is passionate about learning new things. My main area of research lies in the optimization of Large-Scale LLM training, at both the hardware and software levels. I thrive on experimenting with and pushing the boundaries of new state-of-the-art technologies to achieve maximum performance, rather than just sticking to theoretical concepts. I love writing down everything I learn and sharing it with the community!

Currently I’m in Lausanne 🇨🇭 doing my Master’s Thesis at the Machine Learning and Optimization Laboratory under the supervision of Professor Mary-Anne Hartley and Professor Martin Jaggi. My efforts are focused on the Meditron project, building the doctors of the future 🤖🩺⛑️! Although my main contributions are related to the Large-Scale training of the models, I am also interested in other areas such as Reinforcement Learning from Human Feedback (RLHF) and evaluation.

I’m passionate about cycling 🚵🏼, track and field 🏃🏻, and hiking 🏔️. Don’t be surprised if we ever cross paths while I’m training!

- High Performance Computing

- Large-Scale LLM Training

- Distributed Applications

Master's Thesis @ MLO Laboratory, 2024

EPFL, Lausanne

Master in Innovation and Research in Informatics - High Performance Computing, 2022 - 2024

UPC, Barcelona

Bachelor of Telecommunications Engineering - Major in Audiovisual Systems, 2017 - 2022

UPC, Barcelona

Experience & Research Projects

Carrying out my master’s thesis in the Meditron project under the supervision of Professor Mary-Anne Hartley and Professor Martin Jaggi. My contributions include:

- Develop Instruction Tuning pipelines for 8B & 70B models using the Hugging Face 🤗 Stack and DeepSpeed to shard the models across several nodes.

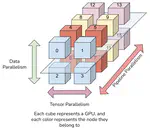

- Develop efficient input data pipelines for Nanotron. The pipelines support failure recovery and Data, Tensor & Pipeline parallelism, and include tests that verify their functionality. [GitHub PR], [Blog]

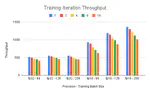

- Conduct a scaling study of the cluster to find the optimal configuration for model training. Benchmark the effects of activation checkpointing, different optimizers, and several precision types, among others.

- Optimize the software stack and develop guidelines for running distributed PyTorch applications across several nodes on the EPFL cluster with the runai scheduler.

Member of the UPC - Providence+ team participating in the XPRIZE: Rainforest competition. During my time on the project I carried out my bachelor’s thesis under the supervision of Professor Ferran Marqués and Professor Xavier Giró-i-Nieto. My contributions include:

- Research synthetic data generation techniques. Develop an end-to-end pipeline to generate synthetic datasets with pixel-level segmentations based on SOTA techniques.

- Train models to classify images among more than 1,000 animal species using contrastive learning techniques.

- Accelerate model training on multiple GPUs by enabling data parallelism and mixed precision and by developing efficient input data pipelines.

My main tasks were:

- Prioritize incidents in the Cellnex network according to their risk and act remotely to mitigate their impact, coordinating local operations to complete their resolution when necessary.

- Actively supervise the Cellnex network to anticipate breakdowns.

- Use software to remotely configure Cisco, NEC, Siae, and Marconi equipment, among others.